Apache Gravitino Python client

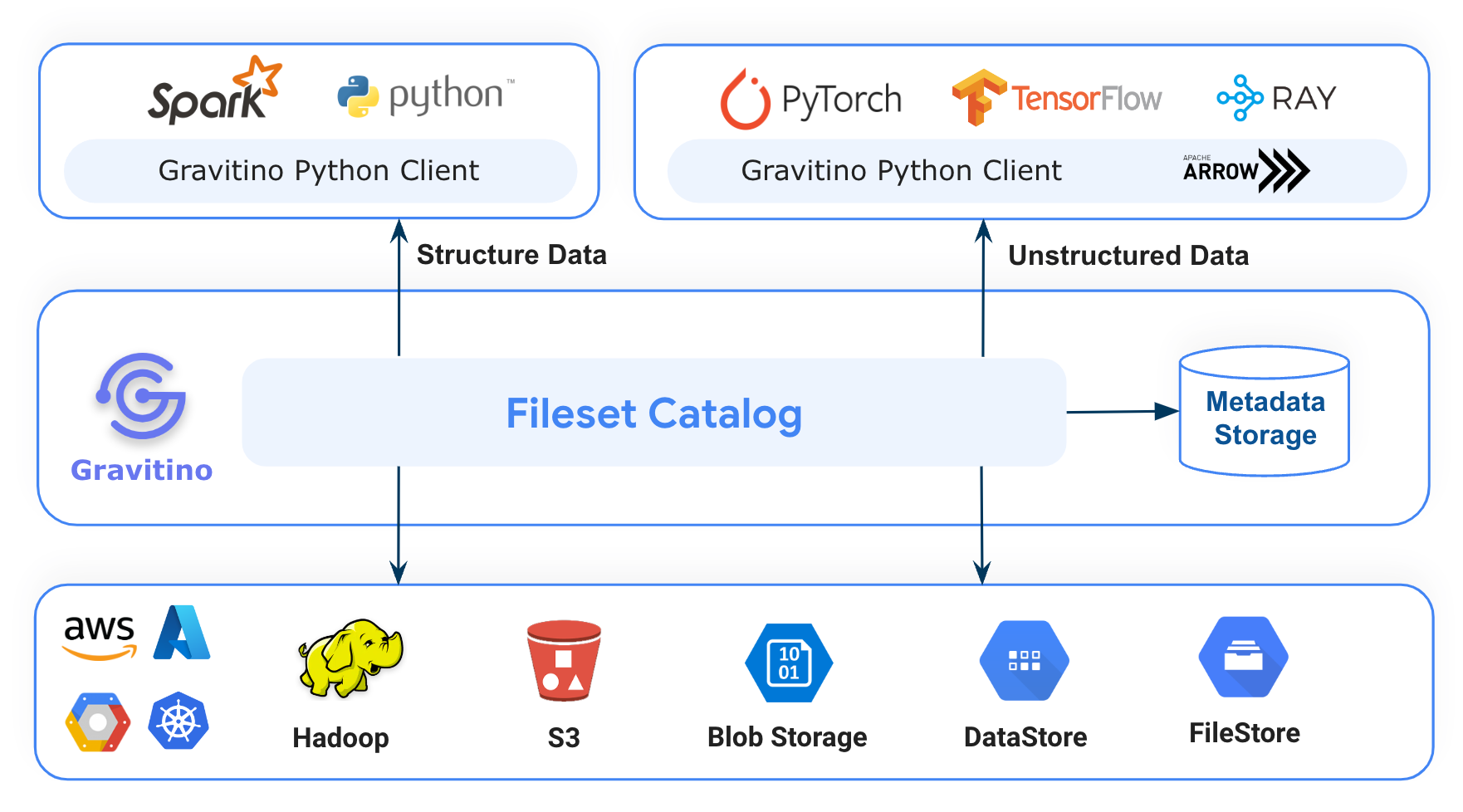

Apache Gravitino is a high-performance, geo-distributed, and federated metadata lake. It manages metadata directly across different sources, types, and regions, and provides users with unified metadata access for data and AI assets.

The Gravitino Python client helps data scientists easily manage metadata using Python.

Usage Guidance

You can use the Gravitino Python client library with Spark, PyTorch, TensorFlow, Ray, and plain Python environments.

First, you must have a Gravitino server set up and running. Refer to the How to install Gravitino document to build the Gravitino server from source code and install it locally.

Apache Gravitino Python client API

Install the Gravitino Python client from PyPI:

pip install apache-gravitino
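To confirm which version of the client `pip` installed, a minimal sketch like the following reads the distribution metadata with the standard-library `importlib.metadata` module (available from Python 3.8, matching the prerequisites). It only queries the `apache-gravitino` distribution name used in the command above and does not import the client itself; the helper name `installed_gravitino_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_gravitino_version(dist_name: str = "apache-gravitino") -> Optional[str]:
    """Return the installed version of `dist_name`, or None if it is not installed.

    Hypothetical helper: it reads package metadata only and never imports the client.
    """
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None
```

Calling `installed_gravitino_version()` in the same environment that `pip` installed into returns a version string after a successful install, and `None` otherwise.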

Apache Gravitino Fileset Example

We offer a playground environment to help you quickly learn how to use the Gravitino Python client to manage non-tabular data on HDFS through Gravitino filesets. Refer to the How to use the playground document to launch a Gravitino server, HDFS, and a Jupyter notebook environment in your local Docker environment.

Once the playground Docker environment has started, open http://localhost:18888/lab/tree/gravitino-fileset-example.ipynb in your browser and run the example.
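Because the containers can take a while to come up, it may help to poll the notebook URL before opening it. The sketch below is a hypothetical helper (not part of the playground or the Gravitino client) built only on the standard library. It treats any HTTP response, including an error status, as a sign that the server is listening, ignores proxy environment variables because the target is local, and gives up after `timeout_s` seconds.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout_s: float = 120.0, interval_s: float = 2.0) -> bool:
    """Poll `url` until any HTTP response arrives or `timeout_s` seconds pass.

    Hypothetical helper, not part of the playground or the Gravitino client.
    """
    # Ignore proxy environment variables: the target is a local container.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with opener.open(url, timeout=interval_s):
                return True  # a successful response: the server is up
        except urllib.error.HTTPError:
            return True  # an error status still means something is listening
        except (urllib.error.URLError, OSError):
            pass  # refused, reset, or timed out: not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_for_http("http://localhost:18888/lab/tree/gravitino-fileset-example.ipynb")` returns `True` once the Jupyter server answers, or `False` if it is still unreachable after two minutes.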

The gravitino-fileset-example contains the following code snippets:

- Install the HDFS Python client.

- Create an HDFS client to connect to HDFS and run some test operations.

- Install the Gravitino Python client.

- Initialize the Gravitino admin client and create a Gravitino metalake.

- Initialize the Gravitino client and list the metalakes.

- Create a Gravitino `Catalog` whose `type` is `Catalog.Type.FILESET` and whose `provider` is `hadoop`.

- Create a Gravitino `Schema` whose `location` points to an HDFS path, and use the `hdfs client` to check that the schema location was created in HDFS.

- Create a `Fileset` whose `type` is `Fileset.Type.MANAGED`, and use the `hdfs client` to check that the fileset location was created in HDFS.

- Drop this `Fileset.Type.MANAGED` fileset and check that the fileset location was deleted from HDFS.

- Create a `Fileset` whose `type` is `Fileset.Type.EXTERNAL` and whose `location` points to an existing HDFS path.

- Drop this `Fileset.Type.EXTERNAL` fileset and check that the fileset location was not deleted from HDFS.
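The difference between the two fileset types that the notebook checks can be modelled without HDFS or a Gravitino server. The following is a simplified, hypothetical in-process sketch that uses a temporary local directory in place of HDFS; `FilesetType`, `LocalFilesetCatalog`, and its methods are illustrative only and are not the Gravitino Python client API. As a further simplification, it assumes that a managed fileset always lives in a sub-directory of its schema location named after the fileset.

```python
import os
import shutil
import tempfile
from enum import Enum


class FilesetType(Enum):
    """Illustrative stand-in for the two fileset types used in the example."""
    MANAGED = "managed"
    EXTERNAL = "external"


class LocalFilesetCatalog:
    """Hypothetical model of a fileset catalog backed by a local directory tree."""

    def __init__(self, root):
        self.root = root
        self.filesets = {}  # (schema, name) -> (FilesetType, location)

    def create_schema(self, schema):
        # The schema location is a directory under the catalog root.
        location = os.path.join(self.root, schema)
        os.makedirs(location, exist_ok=True)
        return location

    def create_fileset(self, schema, name, fileset_type, location=None):
        if fileset_type is FilesetType.MANAGED:
            # Managed: the catalog derives the location and creates the directory.
            location = os.path.join(self.root, schema, name)
            os.makedirs(location, exist_ok=True)
        elif location is None:
            # External: the caller supplies the location (an existing path here).
            raise ValueError("an EXTERNAL fileset needs a location")
        self.filesets[(schema, name)] = (fileset_type, location)
        return location

    def drop_fileset(self, schema, name):
        fileset_type, location = self.filesets.pop((schema, name))
        if fileset_type is FilesetType.MANAGED:
            # Only a managed fileset owns its data, so only it deletes the files.
            shutil.rmtree(location)
        # External: only the metadata entry is removed; the files stay in place.


def demo():
    """Return (managed dir exists after drop, external dir exists after drop)."""
    with tempfile.TemporaryDirectory() as root:
        catalog = LocalFilesetCatalog(root)
        catalog.create_schema("demo_schema")

        managed_dir = catalog.create_fileset("demo_schema", "managed_fs", FilesetType.MANAGED)
        catalog.drop_fileset("demo_schema", "managed_fs")

        external_dir = os.path.join(root, "existing_data")
        os.makedirs(external_dir)  # pre-existing data, like an existing HDFS path
        catalog.create_fileset("demo_schema", "external_fs", FilesetType.EXTERNAL, external_dir)
        catalog.drop_fileset("demo_schema", "external_fs")

        return os.path.exists(managed_dir), os.path.exists(external_dir)
```

`demo()` returns `(False, True)`: the managed fileset's directory is removed together with its metadata, while the external fileset's directory survives the drop, mirroring the last four checks in the list above.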

How to develop the Apache Gravitino Python client

You can use any IDE to develop the Gravitino Python client: open the client-python module directly as a project in the IDE.

Prerequisites

- Python 3.8+

- Refer to How to build Gravitino to set up the necessary build environment.

Build and test

- Clone the Gravitino project.

    git clone git@github.com:apache/gravitino.git

- Build the Gravitino Python client module.

    ./gradlew :clients:client-python:build

- Run the unit tests.

    ./gradlew :clients:client-python:test -PskipITs

- Run the integration tests.

  Because the Python client connects to a Gravitino server to run the integration tests, running them automatically triggers the `./gradlew compileDistribution -x test` command, which compiles the Gravitino project into the `distribution` directory. When you run the integration tests via a Gradle command or an IDE, the Gravitino integration test framework (`integration_test_env.py`) starts and stops the Gravitino server automatically.

    ./gradlew :clients:client-python:test

- Distribute the Gravitino Python client module.

    ./gradlew :clients:client-python:distribution

- Deploy the Gravitino Python client to https://pypi.org/project/apache-gravitino/.

    ./gradlew :clients:client-python:deploy
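The integration test framework starts the Gravitino server before the integration tests and stops it afterwards. As a rough illustration of that pattern only, the sketch below uses `unittest` class-level fixtures (`setUpClass`/`tearDownClass`) to start a throwaway HTTP server once per test class and shut it down when the class finishes. It does not reproduce `integration_test_env.py`: the stub handler, the `ServerBackedTestCase` base class, the `ExampleIT` test, and the `/health` path are all hypothetical, and no Gravitino server is launched.

```python
import threading
import unittest
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class _StubHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a real server: answers every GET with 200."""

    def do_GET(self):
        body = b'{"status": "ok"}'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


class ServerBackedTestCase(unittest.TestCase):
    """Hypothetical base class: one server per test class, stopped afterwards."""

    @classmethod
    def setUpClass(cls):
        # Port 0 asks the operating system for any free port.
        cls.server = ThreadingHTTPServer(("127.0.0.1", 0), _StubHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_port}"
        cls.thread = threading.Thread(target=cls.server.serve_forever, daemon=True)
        cls.thread.start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()      # stop serve_forever()
        cls.server.server_close()  # release the listening socket
        cls.thread.join()


class ExampleIT(ServerBackedTestCase):
    def test_server_is_reachable(self):
        # Bypass proxy settings: the server is on the loopback interface.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(self.base_url + "/health", timeout=5) as resp:
            self.assertEqual(resp.status, 200)
```

A real fixture would start the Gravitino server compiled into the `distribution` directory rather than a stub. Keeping the start/stop logic in a shared base class means the startup cost is paid once per test class instead of once per test.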

Resources

- Official website: https://gravitino.apache.org/

- Project home on GitHub: https://github.com/apache/gravitino/

- Playground with Docker: https://github.com/apache/gravitino-playground

- User documentation: https://gravitino.apache.org/docs/

- Slack Community: https://the-asf.slack.com#gravitino

License

Gravitino is licensed under the Apache License, Version 2.0. See the LICENSE file for details.

ASF Incubator disclaimer

Apache Gravitino is an effort undergoing incubation at The Apache Software Foundation (ASF), sponsored by the Apache Incubator. Incubation is required of all newly accepted projects until a further review indicates that the infrastructure, communications, and decision making process have stabilized in a manner consistent with other successful ASF projects. While incubation status is not necessarily a reflection of the completeness or stability of the code, it does indicate that the project has yet to be fully endorsed by the ASF.